Carbon and Sustainability Technology

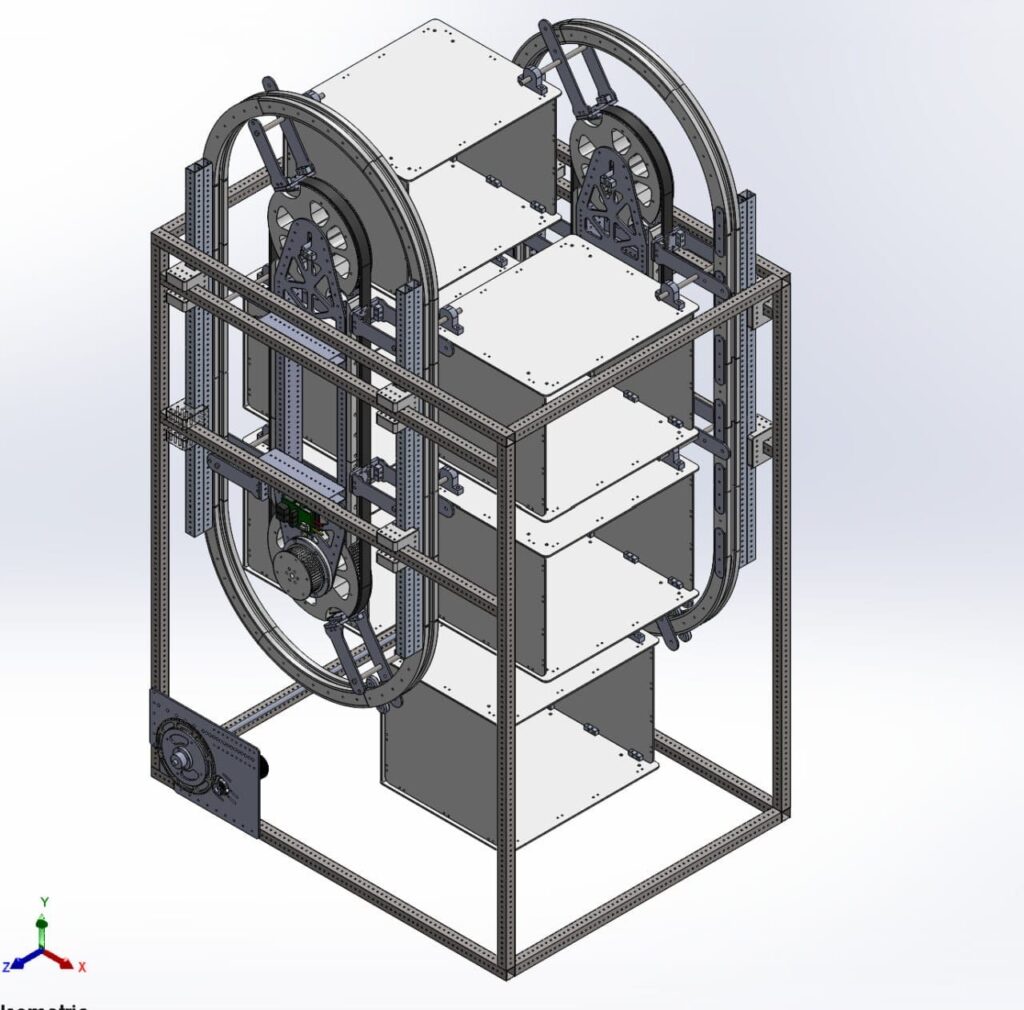

Projects initiated by Student Interest Groups

WeGoALGAE is a Student Interest Group (SIG) focused on microalgae research. We develop microalgae photobioreactors, also known as microalgae air purifiers. Our microalgae air purification technology reduces CO2, among other things, and our air purifiers are more sustainable than commercial air purifiers and more efficient than traditional vascular […]
